Although crackles have long been regarded as a hallmark finding in physical examinations, a new study shows that they are identified unreliably, not only by human physicians but also by artificial intelligence systems.

Auscultation has long been a valuable tool for diagnosing diseases and assessing their severity in a real-time, non-invasive, and cost-effective manner. However, the reliability of breath sound interpretation is heavily dependent on physicians’ experience, preferences, and auscultatory skills. Additionally, the inherent characteristics of adventitious breath sounds pose significant classification challenges. More importantly, artificial intelligence (AI) encounters similar difficulties.

The Emergency Department of National Taiwan University Hospital Hsinchu Branch and the Department of Electrical Engineering at National Tsing Hua University jointly established an online breath sound database named the Formosa Archive of Breath Sound.

This database comprises 11,532 breath sound recordings, all captured in the emergency department with clinical fidelity. Using this extensive dataset and data augmentation techniques including SpecAugment, Gamma Patch-Wise Correction Augmentation, and Mixup, the team developed an AI system for breath sound identification with performance comparable to that of human physicians.
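As a rough illustration of two of the augmentation ideas named above, the sketch below blends two spectrograms and their labels (Mixup) and zeroes out a random frequency band and a random time band (the masking part of SpecAugment). It is not the team's implementation. The function names, the nested-list spectrogram layout (rows as frequency bins, columns as time frames), and all parameter values are assumptions made for this example, and Gamma Patch-Wise Correction Augmentation is not shown.

```python
import random


def mixup(x1, y1, x2, y2, alpha=0.4, rng=random):
    """Blend two spectrograms and their one-hot labels.

    The blend weight is drawn from Beta(alpha, alpha); alpha=0.4 is an
    illustrative value, not one taken from the study.
    """
    lam = rng.betavariate(alpha, alpha)
    x = [[lam * a + (1 - lam) * b for a, b in zip(r1, r2)]
         for r1, r2 in zip(x1, x2)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam


def mask_spectrogram(spec, max_freq_width=2, max_time_width=3, rng=random):
    """SpecAugment-style masking on a copy of `spec`.

    Zeroes one random band of frequency rows and one random band of
    time columns. Time warping, the third SpecAugment step, is omitted.
    """
    n_freq, n_time = len(spec), len(spec[0])
    out = [row[:] for row in spec]  # leave the input untouched

    # Frequency mask: choose a width, then a start row.
    f = rng.randint(0, min(max_freq_width, n_freq))
    f0 = rng.randint(0, n_freq - f)
    for i in range(f0, f0 + f):
        out[i] = [0.0] * n_time

    # Time mask: choose a width, then a start column.
    t = rng.randint(0, min(max_time_width, n_time))
    t0 = rng.randint(0, n_time - t)
    for row in out:
        for j in range(t0, t0 + t):
            row[j] = 0.0
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy 4x6 "spectrograms" with made-up values.
    crackle = [[1.0] * 6 for _ in range(4)]
    normal = [[3.0] * 6 for _ in range(4)]
    mixed, label, lam = mixup(crackle, [1.0, 0.0], normal, [0.0, 1.0], rng=rng)
    print("blend weight:", round(lam, 3), "soft label:", label)
    print(mask_spectrogram(crackle, rng=rng))
```

Both functions return new data and leave their inputs unchanged, so they can be applied independently or chained during training.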

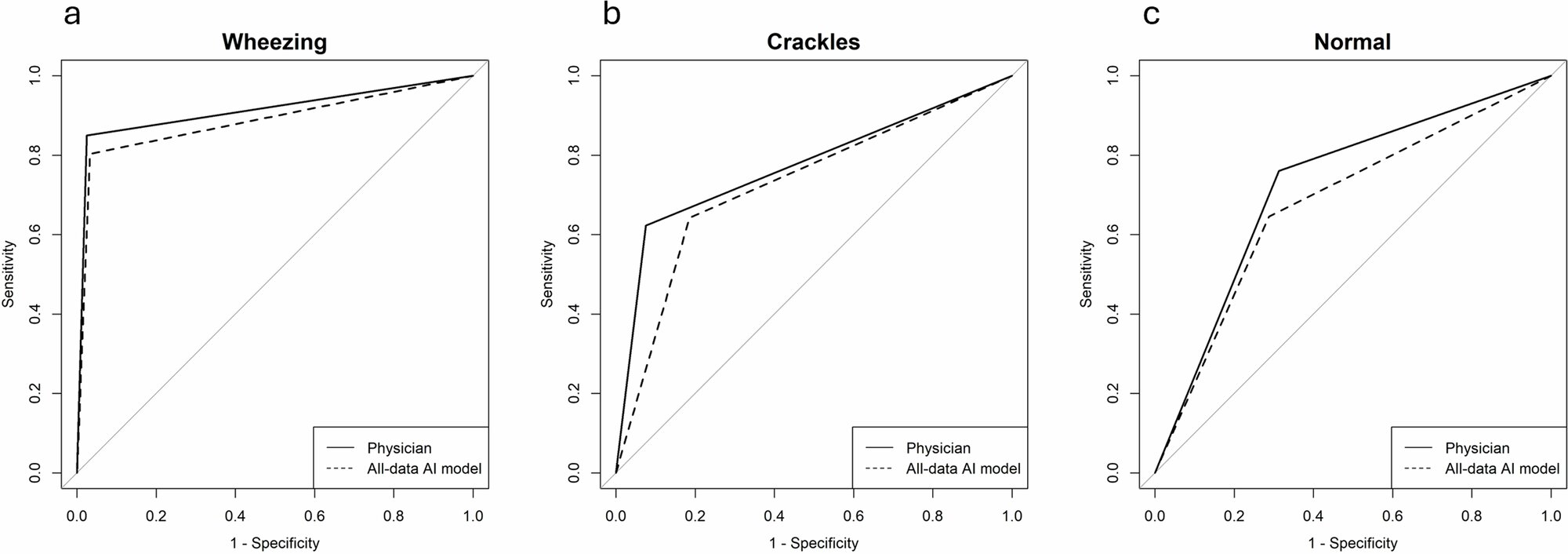

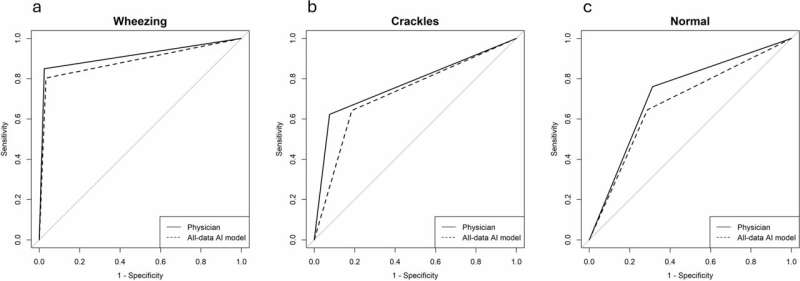

To evaluate performance, both physicians and AI systems were tasked with identifying abnormal breath sounds. Crackles proved problematic: they are hard to recognize because they are discontinuous and transient and, unlike wheezes, lack a musical tonal quality. Surprisingly, the AI systems did not outperform human physicians on this task. As with the physicians, lower specificity, inter-rater agreement, and area under the ROC curve were observed for crackles in the AI analyses.
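For readers unfamiliar with the three measures mentioned above, the following sketch computes each of them on a small invented example. The labels and scores are made up for illustration and are not data from the study. Cohen's kappa is used here as one common measure of inter-rater agreement, which is an assumption: the article does not say which agreement statistic the study used. The function names are likewise hypothetical.

```python
def specificity(truth, pred):
    """Share of truly negative cases (label 0) that were called negative."""
    tn = sum(1 for t, p in zip(truth, pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(truth, pred) if t == 0 and p == 1)
    return tn / (tn + fp)


def cohen_kappa(a, b):
    """Agreement between two raters, corrected for chance agreement."""
    n = len(a)
    observed = sum(1 for x, y in zip(a, b) if x == y) / n
    labels = set(a) | set(b)
    expected = sum((a.count(l) / n) * (b.count(l) / n) for l in labels)
    return (observed - expected) / (1 - expected)


def roc_auc(truth, scores):
    """Area under the ROC curve: the probability that a random positive
    case scores higher than a random negative case (ties count as half)."""
    pos = [s for t, s in zip(truth, scores) if t == 1]
    neg = [s for t, s in zip(truth, scores) if t == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0
               for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Invented example: 1 = crackles present, 0 = crackles absent.
    truth = [1, 1, 1, 0, 0, 0, 0, 0]
    rater_a = [1, 1, 0, 0, 0, 0, 1, 0]
    rater_b = [1, 0, 0, 0, 0, 1, 1, 0]
    model_scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.6, 0.7, 0.1]

    print("specificity (rater A):", specificity(truth, rater_a))
    print("kappa (A vs B):", round(cohen_kappa(rater_a, rater_b), 3))
    print("AUC (model):", round(roc_auc(truth, model_scores), 3))
```

A low specificity means many false alarms, a low kappa means raters often disagree beyond what chance would explain, and an AUC close to 0.5 means the scores barely separate positive from negative cases.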

These findings, which underscore the shared limitations of human and AI auscultation in distinguishing crackles, were published on October 15, 2024, in the journal npj Primary Care Respiratory Medicine.

“This shared weakness renders crackles an unreliable physical finding. Consequently, medical decisions based on crackles should be approached with caution and verified through additional examinations. Moreover, the low signal-to-noise ratio, crackle-like noise artifacts, and irregular loudness contribute to the difficulty AI systems face in identifying crackles.

“Future AI training for breath sound identification should focus more intensively on improving the recognition of crackles,” said Dr. Chun-Hsiang Huang.

More information: Chun-Hsiang Huang et al., The unreliability of crackles: insights from a breath sound study using physicians and artificial intelligence, npj Primary Care Respiratory Medicine (2024). DOI: 10.1038/s41533-024-00392-9

Provided by National Taiwan University

Citation: Study shows AI and physicians have equal difficulty identifying crackles when analyzing breath sounds (2024, November 29), retrieved 29 November 2024 from https://medicalxpress.com/news/2024-11-ai-physicians-equal-difficulty-crackles.html
