Report Highlights Security Risks of Open Source AI

A new report from Anaconda and ETR, “The State of Enterprise Open Source AI,” surveyed 100 IT decision-makers on the key trends shaping enterprise AI and open source adoption. It also underscores the critical need for trusted partners in the Wild West of open source AI.

Security in open source AI projects is a major concern: the report reveals that more than half (58%) of organizations use open source components in at least half of their AI/ML projects, with a third (34%) using them in three-quarters or more.

Along with that heavy usage come some heavy security concerns.

“While open source tools unlock innovation, they also come with security risks that can threaten enterprise stability and reputation,” Anaconda said in a blog post. “The data reveals the vulnerabilities organizations face and the steps businesses are taking to safeguard their systems. Addressing these challenges is vital for building trust and ensuring the safe deployment of AI/ML models.”

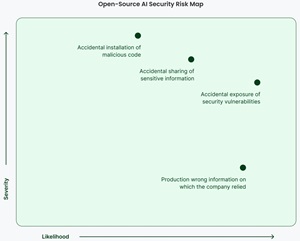

The report itself details how open source AI components pose significant security risks, ranging from vulnerability exposure to the use of malicious code. Organizations report varied impacts, with some incidents causing severe consequences, highlighting the urgent need for robust security measures in open source AI systems.

In fact, the report finds 29% of respondents say security risks are the most important challenge associated with using open source components in AI/ML projects.

“These findings emphasize the necessity of robust security measures and trusted tools for managing open source components,” the report said, with Anaconda helpfully volunteering that its own platform plays a vital role by offering curated, secure open source libraries that help organizations mitigate risks while supporting innovation and efficiency in their AI initiatives.

Other key data points in the report covering several areas of security include:

- Security Vulnerability Exposure:
  - 32% experienced accidental exposure of vulnerabilities.
  - 50% of these incidents were very or extremely significant.

- Flawed AI Insights:
  - 30% encountered reliance on incorrect AI-generated information.
  - 23% categorized these impacts as very or extremely significant.

- Sensitive Information Exposure:
  - Reported by 21% of respondents.
  - 52% of cases had severe impacts.

- Malicious Code Incidents:
  - 10% faced accidental installation of malicious code.
  - 60% of these incidents were very or extremely significant.
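Each category above pairs two percentages: the share of respondents who reported an incident, and the share of those incidents rated very or extremely significant (or severe). Multiplying the two gives a rough sense of how much of the whole survey sample was seriously affected. The sketch below is a back-of-the-envelope reading and not a figure from the report: it assumes each affected respondent counts once and that the second percentage applies to the first group. The names `findings` and `overall_share` are illustrative.

```python
# Figures quoted in the bullet points above:
# (share of respondents reporting the incident,
#  share of those incidents rated very/extremely significant or severe).
findings = {
    "Security vulnerability exposure": (0.32, 0.50),
    "Flawed AI insights": (0.30, 0.23),
    "Sensitive information exposure": (0.21, 0.52),
    "Malicious code incidents": (0.10, 0.60),
}


def overall_share(reported: float, severe_given_reported: float) -> float:
    """Approximate share of ALL respondents with a serious incident.

    Assumption (not stated in the report): one incident per affected
    respondent, so the conditional share can simply be multiplied
    by the reporting share.
    """
    return reported * severe_given_reported


shares = {name: overall_share(r, s) for name, (r, s) in findings.items()}

for name, share in shares.items():
    print(f"{name}: {share:.1%} of all respondents")
```

Under those assumptions, roughly 16% of all respondents saw a highly significant vulnerability exposure, about 7% a highly significant flawed-insight incident, about 11% a severe sensitive-information exposure, and 6% a highly significant malicious code incident.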

The lengthy and detailed report also delves into topics like:

- Scaling AI Without Sacrificing Stability

- Accelerating AI Development

- How AI Leaders Are Outpacing Their Peers

- Realizing ROI from AI Projects

- Challenges with Fine-Tuning and Implementing AI Models

- Breaking Down Silos